One of the key themes at this year’s Big Data Belfast conference was artificial intelligence (AI) and machine learning (ML). Over the years, the topic has remained a firm crowd favourite. It appeals both to those eager to hear about the latest innovations and to those interested in how AI and ML might affect their own business.

Artificial intelligence and machine learning are fast becoming an essential part of contemporary business operations. In a report published earlier this year, Gartner found that 14% of global CIOs had already deployed AI within their organisations and that 48% would deploy it in 2019 or by 2020.

Recent developments within the artificial intelligence and machine learning space have enabled more organisations to incorporate these types of technologies into their core business strategy. One such development is ‘transfer learning’.

What is transfer learning?

Transfer learning is a machine learning method that involves reusing an existing, trained neural network, developed for one task, as the foundation for another task. At the moment, the two most common applications of transfer learning are text and image processing.

What is the challenge of using transfer learning?

The main challenge of transfer learning is to retain the existing knowledge in the model while adapting the model to your own task. If you adapt the model to your task too aggressively, much of the existing knowledge can be lost. This phenomenon is known as ‘catastrophic forgetting’. In that case, the model may perform very poorly on your task.

In recent years, transfer learning has become a much more feasible method for training models. This is thanks primarily to a number of strategies that the deep learning community has developed to limit this problem.

Why does transfer learning work so well with neural networks?

Transfer learning works with neural networks in a way that it does not with the simpler one-layer models such as logistic regression. This is because neural networks pass input data through a series of layers while the simpler models only use a single layer.

Each of these layers can be thought of as producing a different representation of the initial data, which might be an image or a text document. Research has shown that these representations build on one another. For example, in an image recognition network, the early layers might capture the simplest features of the data, such as the presence of edges or colour gradients. Later layers capture more complex features composed of these simple ones.
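To make the idea of layers as successive representations concrete, here is a minimal pure-Python sketch of a forward pass that keeps the output of every layer. The two-layer network, its hand-picked weights and the helper names `dense` and `forward_with_representations` are hypothetical. A real image network would have many more (mostly convolutional) layers with learned weights.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # weights[i][j]: connection from input i to output j
Layer = Tuple[Matrix, Vector]  # (weights, bias)


def dense(x: Vector, weights: Matrix, bias: Vector) -> Vector:
    """Fully connected layer followed by a ReLU activation."""
    return [
        max(0.0, sum(x[i] * weights[i][j] for i in range(len(x))) + bias[j])
        for j in range(len(bias))
    ]


def forward_with_representations(x: Vector, layers: List[Layer]) -> List[Vector]:
    """Run x through every layer and return the input plus each layer's output."""
    representations = [x]
    for weights, bias in layers:
        x = dense(x, weights, bias)
        representations.append(x)
    return representations


# Hypothetical toy network: 2 inputs -> 2 hidden units -> 1 output.
toy_layers: List[Layer] = [
    ([[1.0, -1.0], [1.0, 1.0]], [0.0, 0.0]),
    ([[0.5], [1.0]], [0.0]),
]

reps = forward_with_representations([1.0, 2.0], toy_layers)
print(reps)  # [[1.0, 2.0], [3.0, 1.0], [2.5]]
```

Each entry in `reps` is a different representation of the same input. In a trained image network, the early entries would respond to simple patterns such as edges and the later ones to more complex features.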

Transfer learning works with neural networks because the different layers of the network can be treated differently. The features learned by the earlier layers are so general that they are useful for almost any task in the same domain; edges, for instance, matter in nearly every image. As such, these early layers are typically kept almost unchanged from the original model. The highly task-specific later layers, on the other hand, can more readily be overwritten or replaced entirely during the transfer process.

How do you use transfer learning in practice?

The first step in transfer learning is to download a pre-trained model. These models are hosted in open online repositories such as TensorFlow Hub. Training these models initially requires enormous computational resources, but the final model files are much smaller and can often be downloaded in seconds.

The second step in transfer learning is to replace the final layer of the model. Image models pre-trained on the ImageNet dataset typically distinguish between 1,000 categories, and their final layer is shaped accordingly. You replace this layer with a new one whose output size matches the number of categories you want to classify.
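As a framework-free sketch of this step, the code below represents a model as a list of dense layers. It swaps the last layer for a freshly initialised one with the required number of outputs. The layer format, the small uniform initialisation and the names `new_layer` and `replace_head` are assumptions for illustration. In practice, a deep learning framework such as TensorFlow would normally handle this step.

```python
import random
from typing import List, Tuple

Matrix = List[List[float]]  # weights[i][j]: input i -> output j
Layer = Tuple[Matrix, List[float]]  # (weights, bias)


def new_layer(n_in: int, n_out: int, rng: random.Random) -> Layer:
    """Create an untrained dense layer with small random weights and zero bias."""
    weights = [[rng.uniform(-0.05, 0.05) for _ in range(n_out)] for _ in range(n_in)]
    return weights, [0.0] * n_out


def replace_head(layers: List[Layer], n_classes: int, seed: int = 0) -> List[Layer]:
    """Keep every pre-trained layer except the last and attach a new output layer."""
    n_in = len(layers[-1][0])  # the old head's input width (rows of its weight matrix)
    return layers[:-1] + [new_layer(n_in, n_classes, random.Random(seed))]


# Hypothetical stand-in for a pre-trained model with 1,000 ImageNet outputs.
rng = random.Random(42)
pretrained = [new_layer(8, 16, rng), new_layer(16, 1000, rng)]

# Two new categories, e.g. "car" and "motorbike".
model = replace_head(pretrained, n_classes=2)
print(len(pretrained[-1][1]), "->", len(model[-1][1]))  # 1000 -> 2
```

Only the new head starts from random weights. The earlier layers keep their pre-trained values, which is what lets the model reuse what it has already learned.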

Original neural network

Layer One: Edges

Layer Two: Gradients

Layer Three: Shapes

Layer Four: Image identification

New neural network

Layer One: Edges

Layer Two: Gradients

Layer Three: Shapes

Layer Four (new layer): Is it a car or a motorbike?

The next step is to resume training the model with your own data. This step is known as ‘fine-tuning’. New strategies for fine-tuning are continually emerging. Two related strategies developed for transfer learning are i) layer freezing and ii) adaptive learning rates.

i) Layer freezing, as the name suggests, is the process of freezing layers within a trained model so that they remain completely unchanged while you fine-tune it. The early layers, which capture more general features, are frozen, while the later layers are allowed to adapt to your data. If the frozen layers are later unfrozen for further training, adaptive learning rates help fine-tune the model without causing ‘catastrophic forgetting’.

ii) Adaptive learning rates assign a different learning rate to each layer, typically smaller for the early layers. This keeps changes to the early layers of the network to minor tweaks that help improve performance, while the later layers are free to change more.
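The two strategies can be sketched together as a single gradient-descent update. Each layer has its own learning rate, and frozen layers are skipped. This is a minimal pure-Python illustration that assumes each layer's parameters and gradients are already available as flat lists. The helper names, the halving of the learning rate per layer (`decay=2.0`) and the example numbers are hypothetical choices, not settings from any particular library.

```python
from typing import List


def layerwise_lrs(base_lr: float, n_layers: int, decay: float = 2.0) -> List[float]:
    """Give the last layer base_lr and divide by `decay` for each earlier layer."""
    return [base_lr / decay ** (n_layers - 1 - k) for k in range(n_layers)]


def fine_tune_step(
    params: List[List[float]],
    grads: List[List[float]],
    lrs: List[float],
    frozen: List[bool],
) -> List[List[float]]:
    """One SGD step: frozen layers are copied unchanged, the rest use their own rate."""
    updated = []
    for p, g, lr, is_frozen in zip(params, grads, lrs, frozen):
        if is_frozen:
            updated.append(list(p))  # layer freezing: no change at all
        else:
            updated.append([pi - lr * gi for pi, gi in zip(p, g)])
    return updated


lrs = layerwise_lrs(0.01, 3)
print(lrs)  # [0.0025, 0.005, 0.01]

# Placeholder parameters and gradients for a three-layer model.
params = [[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]]
grads = [[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]]

# Phase 1: freeze the two early layers and train only the new head.
stage1 = fine_tune_step(params, grads, lrs, frozen=[True, True, False])

# Phase 2: unfreeze everything; early layers still move only slightly.
stage2 = fine_tune_step(stage1, grads, lrs, frozen=[False, False, False])
```

In phase 2, the first layer moves by a quarter of the head's step, which is the "minor tweak" behaviour described above. The gradients here are placeholders rather than values computed from real data.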

What are the benefits of transfer learning?

Transfer learning has enabled more organisations to incorporate artificial intelligence and machine learning into their core business strategy. Because it avoids training a model from scratch, it reduces the financial, time and infrastructure costs involved. This has made artificial intelligence and machine learning more accessible than ever before.

Organisations no longer need to build their own deep learning models from scratch. Instead, they can capitalise on the expertise and models of others as the foundation for their own solutions.

Use case

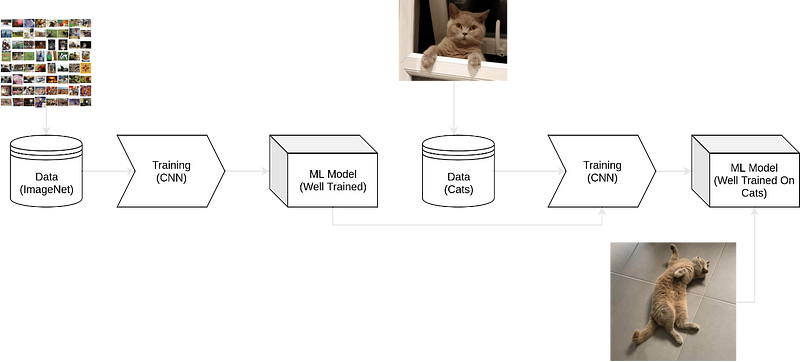

During his keynote at Big Data Belfast 2019, our CTO Alastair McKinley presented a simple transfer learning model that could distinguish between a British Shorthair and a Grey Tabby, two varieties of cat.

Alastair’s model started from a pre-trained image recognition model. He then retrained it with new data (pictures of each type of cat) labelled with the new classification categories.
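Here is a rough sketch of the idea behind this use case. If the frozen pre-trained network turns each photo into a feature vector, then distinguishing two kinds of cat reduces to training a small new classification layer on those vectors. Below, that new layer is a binary logistic-regression head trained with plain gradient descent. The feature vectors are made-up numbers standing in for the network's output, and this is not Alastair’s actual code.

```python
import math
from typing import List, Tuple


def train_head(
    features: List[List[float]], labels: List[int], lr: float = 0.5, epochs: int = 200
) -> Tuple[List[float], float]:
    """Fit a binary logistic-regression head on fixed features from a frozen model."""
    w = [0.0] * len(features[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            z = sum(wi * xi for wi, xi in zip(w, x)) + b
            p = 1.0 / (1.0 + math.exp(-z))  # predicted probability of class 1
            err = p - y  # derivative of the log loss with respect to z
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


def predict(w: List[float], b: float, x: List[float]) -> int:
    """Return 1 if the head's score is positive, else 0."""
    return int(sum(wi * xi for wi, xi in zip(w, x)) + b > 0)


# Hypothetical feature vectors produced by the frozen network (not real data).
# Label 0 = British Shorthair, label 1 = Grey Tabby.
feats = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.7]]
labels = [0, 0, 1, 1]

w, b = train_head(feats, labels)
print([predict(w, b, x) for x in feats])  # [0, 0, 1, 1]
```

Only the small head (here, three numbers) is trained, so this step needs far less data and compute than training the whole network. That cost saving is what the example is meant to illustrate.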

Despite the simple nature of this example, it perfectly illustrates some of the key benefits transfer learning presents. Had Alastair chosen to create and train a model from scratch, infrastructure costs alone would have been astronomical.

Conclusion

Transfer learning opens up the opportunities presented by artificial intelligence and machine learning to organisations that previously might not have been able to access them.

At Analytics Engines, we have developed a number of solutions that make use of transfer learning. These enable us to deliver more complex solutions more efficiently.

DATO, a solution we recently developed as part of an SBRI (Small Business Research Initiative) for Durham and Blaenau Gwent County Councils, makes use of an existing image recognition neural network. The network has been retrained specifically to recognise public works issues.

At its core, transfer learning enables organisations to do more with much less…